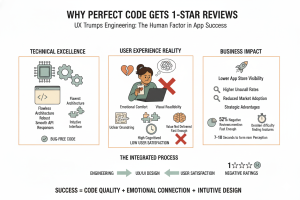

Even the most reliable, bug-free, and carefully built mobile application can still accumulate a long list of 1-star reviews. This gap between technical achievement and user experience is no longer an exception. Users do not judge code quality; they judge emotional comfort, visual readability, how quickly value is delivered, and the experience they get the first time they open the app. In a rapidly changing market, a product with perfect code can fail simply because it does not align with users’ mindsets, behaviors, or perceptions of design symbols.

1. Code Quality Doesn’t Guarantee User Satisfaction

A development team may deliver flawless performance, smooth API responses, and robust architecture, yet feedback still reflects dissatisfaction. Users do not evaluate code—they evaluate moments, micro-interactions, and clarity. Even minor friction points, unclear UI logic, or intrusive flows can overshadow technical excellence. This phenomenon is why businesses today rely on insight-driven teams from an app development company that understand both engineering and behavioral patterns.

Why Users Feel Unhappy Despite Perfect Engineering

- Unclear Onboarding Journeys: When users cannot immediately understand what the app does, initial frustration grows quickly.

- Invisible UX Gaps: Smooth code doesn’t prevent emotional friction—poor labeling, inconsistent patterns, or confusing layouts cause instant drop-offs.

- Value Not Delivered Fast Enough: Users expect quick wins; an app that delays benefits feels inefficient regardless of its stability.

Business Impact

- Lower app store visibility due to rating algorithms.

- Higher uninstall rates within the first hour.

- Wider gap between development effort and market adoption.

2. UX Triggers That Lead to Negative Ratings

A perfectly coded app may underperform because users read design choices differently than the team intended. What seems “minimalist” to a team may feel “empty” to users, and what developers call “feature-rich” may feel “complicated” to someone new. Today, companies often consult an app development firm to address behavioral insights beyond the code layer.

Common UX Triggers Behind 1-Star Reviews

- Unoptimized Navigation Structure: If the hierarchy doesn’t match user mental models, frustration occurs almost instantly.

- High Cognitive Load: Too many decisions or ambiguous paths make the experience tiring.

- Poor Feedback Loops: Missing animations, loading states, or haptic confirmations make an app feel broken.

Supporting Research Trends

- Studies reveal that 52% of app deletions happen due to UX friction, not performance.

- 40% of negative reviews mention difficulty finding key features.

- Users form a perception within 7–10 seconds of opening an app.

3. Expectations Shape Ratings More Than Functionality

Most users compare every digital experience to category leaders like Uber, Spotify, and Instagram. This means even niche products must meet global expectations in speed, consistency, and simplicity. Businesses collaborate with a mobile development company to align their interfaces with patterns users already trust.

What Drives User Expectations Today

- Benchmarks Set by Global Apps: Users expect the same smoothness and clarity even from smaller products.

- Real-World Usage Context: Poor network conditions, distractions, or shared devices distort perceived performance.

- Design Familiarity: When an app deviates from widely accepted UI norms, users assume it’s flawed.

Why Simpler Apps Sometimes Win

- Fewer features reduce cognitive load.

- Limited options streamline decision-making.

- Familiar layouts reduce learning time.

4. The Experience After Installation Matters More Than the Build

Even if the app passes QA flawlessly, post-installation friction shapes user emotions. This is where many teams discover that negative reviews stem from overlooked experience details, not shortcomings in engineering. Businesses often rely on a mobile app development company to refine journey maps and reduce these hidden pitfalls.

Key Post-Installation Factors Affecting Ratings

- Onboarding Length: Users uninstall apps that ask for too much too soon.

- Notification Sensitivity: Aggressive alerts immediately degrade sentiment.

- Accessibility Oversights: Low contrast, small tap targets, and weak typography hurt usability.
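Of the factors above, color contrast is one of the few that can be checked automatically. The sketch below follows the contrast-ratio formula published in WCAG 2.x and compares the result against the AA thresholds (4.5:1 for normal text, 3:1 for large text). The function names and the hex-string input format are assumptions made for illustration; they are not part of any particular toolkit.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB' (hypothetical input format)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """True if the pair meets the WCAG AA minimum for the given text size."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold
```

A check like this could run over a design system's text and background color pairs before release, so that low-contrast combinations are caught by the team rather than by reviewers in the app store.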

5. Design Language, Visual Hierarchy & Emotional Feedback

Great engineering becomes irrelevant if users struggle visually or emotionally. This is why teams partner with a mobile application design company to ensure that the interface communicates clearly and guides users effortlessly through tasks.

Critical Design Elements That Influence Reviews

- Hierarchy Clarity: Users must instantly see where to go next.

- Visual Rhythm: Consistency in spacing, alignment, and components creates comfort.

- Micro-Interactions: Small animations help users feel the app is responsive and reliable.

6. Trust, Security Cues & Perceived Professionalism

Apps that appear “basic” or “unfinished” visually—even if they are technically sound—lose trust. Thoughtful UI choices, polished interactions, and familiar patterns signal reliability. This is why product teams increasingly work with a mobile app development agency to refine micro-gaps that influence perception.

Elements That Build or Break Trust

- Visual polish

- Consistent iconography

- Predictable gestures

- Clear error messages

7. Why Product Teams Must Map Real User Behavior

Engineering insights alone cannot reveal emotional friction or perception gaps. User testing, usability mapping, and experience analytics are essential. Teams often collaborate with a mobile application development agency to create behavior-driven improvement cycles.

What Behavior Mapping Reveals

- Path deviations

- Confusion points

- Drop-off hotspots

- Feature misunderstanding

- Emotional reactions to UI patterns
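Drop-off hotspots in particular can be estimated from simple funnel counts. The following is a minimal sketch, assuming the analytics tool can export how many users reached each step of a journey in order. The step names and numbers are invented for illustration, and the helper functions are hypothetical rather than taken from any analytics library.

```python
from typing import List, Tuple

Funnel = List[Tuple[str, int]]  # ordered (step name, users who reached it)


def drop_off_rates(funnel: Funnel) -> List[Tuple[str, float]]:
    """Share of users lost between each pair of consecutive steps."""
    rates = []
    for (prev_name, prev_users), (name, users) in zip(funnel, funnel[1:]):
        # Guard against division by zero when no one reached the previous step.
        rate = (prev_users - users) / prev_users if prev_users else 0.0
        rates.append((f"{prev_name} -> {name}", rate))
    return rates


def worst_hotspot(funnel: Funnel) -> Tuple[str, float]:
    """Transition with the highest drop-off; the funnel needs at least two steps."""
    return max(drop_off_rates(funnel), key=lambda item: item[1])


# Illustrative data only, not real measurements.
example_funnel: Funnel = [
    ("install", 1000),
    ("onboarding_done", 600),
    ("first_key_action", 540),
]

if __name__ == "__main__":
    for transition, rate in drop_off_rates(example_funnel):
        print(f"{transition}: {rate:.0%} drop-off")
    print("Worst hotspot:", worst_hotspot(example_funnel)[0])
```

The numbers only show where users leave, not why. The transition flagged here is a candidate for usability testing or session review, which is where confusion points and emotional reactions become visible.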

8. Ratings Improve When UX, UI & Engineering Work Together

The strongest apps integrate design, engineering, psychology, and product strategy. Stability is only one ingredient. Users reward clarity, comfort, familiarity, and intuitive flows. Many enterprises now work with an enterprise mobile app development company to bridge these layers.

When Engineering and UX Are Aligned

- User trust increases

- Friction reduces

- Retention improves

- Ratings rise organically

9. Visual Identity & Interaction Quality Shape Long-Term Perception

An app can be functional yet feel unpolished. Seamless transitions, minimalist design, and consistent branding shape user satisfaction long after installation. This is why many companies turn to a mobile application design firm to streamline and update their visual system.

Long-Term Design Factors

- Motion design approach

- Brand-consistent components

- Clear, readable type systems

- Accessible color schemes

Conclusion: Technical Excellence Is Only Half the Story

Even a perfectly coded application cannot guarantee a good rating, because user perception is shaped by clarity, emotional comfort, and an intuitive interface rather than by engineering quality. When businesses align UX, UI, and technical implementation, they build products that are easy to use, easy to trust, and feel valuable. A successful app is a well-built application that fits actual user behavior and conveys its value immediately.

Whether you are enhancing an existing product or developing a new one, focus on an integrated design and engineering process. An easier, more natural experience tends to lead to better ratings and sustainable user satisfaction.